Demystifying Explainable Artificial Intelligence (XAI): Enhancing Transparency in AI Systems

Explore the realm of Explainable Artificial Intelligence (XAI) and its critical role in unraveling complex AI decision-making processes. Learn how XAI empowers users to understand AI systems, detect biases, ensure transparency, and foster human-AI collaboration.

The Need for Explainable AI

Discover why achieving transparency in AI decisions is imperative. Delve into how traditional AI models’ “black box” nature hampers their use in sensitive domains like healthcare, finance, and justice, and the importance of XAI in addressing these challenges.

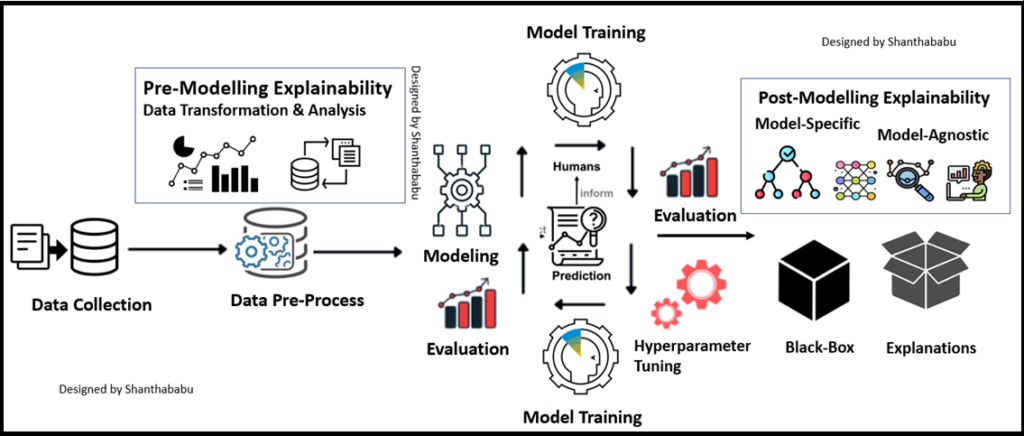

Approaches to Achieve Explainability

Uncover the various methods and techniques utilized in XAI to demystify AI systems:

Rule-Based Approaches

Understand how explicit rules or decision trees provide interpretable insights into AI predictions, enabling traceability of decisions back to specific rules or conditions.
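
To make the idea of tracing a decision back to a rule concrete, here is a minimal sketch of a rule-based classifier that returns both its decision and the rule that fired. The loan-screening scenario, the thresholds, and the names `Rule`, `RULES`, and `decide` are illustrative assumptions, not part of any particular system.

```python
from typing import Callable, NamedTuple

class Rule(NamedTuple):
    name: str                          # human-readable label shown in the explanation
    condition: Callable[[dict], bool]  # predicate over an applicant record
    decision: str                      # outcome when the rule fires

# Hypothetical loan-screening rules, checked in order; the first match wins.
RULES = [
    Rule("income below 20000", lambda a: a["income"] < 20000, "reject"),
    Rule("debt ratio above 0.5", lambda a: a["debt_ratio"] > 0.5, "reject"),
    Rule("default: no rejection rule fired", lambda a: True, "approve"),
]

def decide(applicant: dict) -> tuple[str, str]:
    """Return (decision, name of the rule that produced it)."""
    for rule in RULES:
        if rule.condition(applicant):
            return rule.decision, rule.name
    raise ValueError("no rule matched")  # unreachable while a default rule exists

decision, reason = decide({"income": 45000, "debt_ratio": 0.62})
print(f"{decision} because: {reason}")  # reject because: debt ratio above 0.5
```

Because every outcome carries the name of the rule behind it, the explanation is a by-product of the prediction rather than a separate analysis step.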

Feature Importance Analysis

Explore techniques that assess each input feature’s impact on AI model outputs, helping identify key contributing factors.
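
One common way to assess feature impact is permutation importance: shuffle one feature column and measure how much the model's error grows. The sketch below uses only the standard library; the `model` function and the synthetic data are hypothetical stand-ins for a trained model and a validation set.

```python
import random

def model(row):
    # Hypothetical black-box model: depends strongly on feature 0,
    # weakly on feature 1, and not at all on feature 2.
    return 3.0 * row[0] + 0.5 * row[1]

def mse(rows, targets, predict):
    """Mean squared error of `predict` over the given rows."""
    return sum((predict(r) - t) ** 2 for r, t in zip(rows, targets)) / len(rows)

def permutation_importance(rows, targets, predict, n_features, seed=0):
    """Increase in error when one feature column is shuffled at a time."""
    rng = random.Random(seed)
    baseline = mse(rows, targets, predict)
    scores = []
    for j in range(n_features):
        column = [r[j] for r in rows]
        rng.shuffle(column)  # break the link between feature j and the target
        shuffled = [r[:j] + [v] + r[j + 1:] for r, v in zip(rows, column)]
        scores.append(mse(shuffled, targets, predict) - baseline)
    return scores

rng = random.Random(42)
X = [[rng.random() for _ in range(3)] for _ in range(200)]
y = [model(r) for r in X]  # synthetic targets, so the baseline error is zero
importances = permutation_importance(X, y, model, n_features=3)
print(importances)  # largest for feature 0, smaller for feature 1, zero for feature 2
```

The function only calls `predict`, so the same procedure applies to any model that can score a row.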

Local Interpretability Techniques

Learn about local interpretability methods, such as LIME and SHAP, which explain individual predictions by highlighting the features that most influenced each one.
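
SHAP builds on Shapley values from cooperative game theory. The sketch below computes exact Shapley values for a single prediction by enumerating feature coalitions. It is a from-scratch illustration, not the `shap` library's API, and it assumes that "missing" features are replaced by a fixed baseline; the `predict` function, the instance, and the baseline are all hypothetical.

```python
from itertools import combinations
from math import factorial

def predict(x):
    # Hypothetical model with an interaction between features 0 and 1;
    # feature 2 is deliberately unused.
    return 2.0 * x[0] + x[1] + x[0] * x[1]

def shapley_values(predict, instance, baseline):
    """Exact Shapley attributions; features outside a coalition take baseline values."""
    n = len(instance)

    def value(coalition):
        mixed = [instance[i] if i in coalition else baseline[i] for i in range(n)]
        return predict(mixed)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

x = [1.0, 2.0, 5.0]
base = [0.0, 0.0, 0.0]
phi = shapley_values(predict, x, base)
print(phi)  # approximately [3.0, 3.0, 0.0]; sums to predict(x) - predict(base)
```

The interaction term is split evenly between the two features involved, and the attributions add up to the difference between the prediction and the baseline prediction. Exact enumeration grows exponentially with the number of features, which is why practical tools rely on approximations.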

Model-Agnostic Methods

Discover approaches like LIME and SHAP, which treat the model as a black box and can therefore explain predictions from any AI model, ensuring versatility across diverse model architectures.

Transparent Model Architectures

Understand how inherently interpretable models like decision trees, linear models, or rule-based systems provide transparency, often at the cost of some predictive power on complex tasks.
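
A linear model illustrates why such architectures are considered transparent: the prediction is the intercept plus one additive term per feature, so each term can be read directly as that feature's contribution. The coefficients, feature names, and the `explain_linear` helper below are hypothetical and chosen only for illustration.

```python
# Hypothetical coefficients of an already-fitted linear risk model.
INTERCEPT = 1.5
WEIGHTS = {"age": 0.02, "blood_pressure": 0.01, "smoker": 0.8}

def explain_linear(features: dict) -> tuple[float, dict]:
    """Return the prediction and each feature's additive contribution to it."""
    contributions = {name: WEIGHTS[name] * features[name] for name in WEIGHTS}
    prediction = INTERCEPT + sum(contributions.values())
    return prediction, contributions

score, parts = explain_linear({"age": 50, "blood_pressure": 130, "smoker": 1})
# List contributions from largest to smallest magnitude.
for name, c in sorted(parts.items(), key=lambda kv: -abs(kv[1])):
    print(f"{name:>15}: {c:+.2f}")
print(f"{'prediction':>15}: {score:.2f}")
```

No separate explanation method is needed here; the model's own parameters are the explanation.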

Interactive Visualizations

Explore visualization techniques like saliency maps and heatmaps that visually represent AI model behavior, enhancing understanding of influential regions or concepts.
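
One simple way to produce such a heatmap is occlusion: blank out each region of the input in turn and record how much the model's score drops. The sketch below assumes a hypothetical `score` function standing in for a classifier and a tiny 4x4 "image"; gradient-based saliency methods follow a different procedure.

```python
def score(image):
    # Hypothetical classifier score: responds only to the centre 2x2 patch.
    return sum(image[r][c] for r in (1, 2) for c in (1, 2))

def occlusion_map(image, score_fn, fill=0.0):
    """Score drop when each pixel is replaced by `fill`, one pixel at a time."""
    base = score_fn(image)
    heat = []
    for r, row in enumerate(image):
        heat_row = []
        for c in range(len(row)):
            occluded = [list(line) for line in image]  # copy so the input is untouched
            occluded[r][c] = fill
            heat_row.append(base - score_fn(occluded))
        heat.append(heat_row)
    return heat

image = [[1.0] * 4 for _ in range(4)]
heat = occlusion_map(image, score)
# Text rendering of the heatmap: '#' marks pixels that influence the score.
for row in heat:
    print(" ".join("#" if v > 0 else "." for v in row))
```

The printed grid marks only the centre four pixels, matching the region the hypothetical model actually uses.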

Simplicity and Comprehensibility

Learn how techniques like distillation or model compression train a smaller, simpler model to reproduce a complex model's behavior while retaining its essential characteristics, enhancing overall explainability.
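
As a toy illustration of distillation, the sketch below fits a one-threshold "student" rule to the outputs of a more complex "teacher" model and reports how often the two agree (fidelity). The `teacher` function, the input grid, and the `fit_stump` helper are hypothetical; real distillation typically involves far larger models and soft targets.

```python
import math

def teacher(x):
    # Hypothetical "complex" model: a smooth nonlinear score thresholded at 0.5.
    return 1 if 1 / (1 + math.exp(-8 * (x - 0.6))) > 0.5 else 0

def fit_stump(xs, labels):
    """Find the threshold t so that 'predict 1 if x > t' best matches the labels."""
    best_t, best_acc = None, -1.0
    for t in sorted(xs):
        acc = sum((x > t) == bool(y) for x, y in zip(xs, labels)) / len(xs)
        if acc > best_acc:
            best_t, best_acc = t, acc
    return best_t, best_acc

xs = [i / 100 for i in range(101)]
teacher_labels = [teacher(x) for x in xs]  # the student learns from the teacher, not ground truth
threshold, fidelity = fit_stump(xs, teacher_labels)
print(f"student rule: predict 1 if x > {threshold:.2f} (fidelity {fidelity:.0%})")
```

The student is far easier to read than the teacher, and the fidelity score indicates how much of the teacher's behavior that single rule captures on the sampled inputs.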

Domain Expertise and Contextual Explanations

Discover the significance of incorporating domain knowledge and contextual information to align explanations with human expertise and expectations.

Balancing Transparency and Performance

Recognize the importance of tailoring explainability techniques to meet specific application requirements and strike the right balance between interpretability and performance.

Advancements and Future of XAI

Explore how ongoing research in XAI is producing new methods and establishing ethical standards and guidelines for responsible AI deployment.

Conclusion

Understand the pivotal role of Explainable Artificial Intelligence in unraveling the complexities of AI decision-making. Discover how approaches like rule-based methods, feature importance, and local interpretability enhance transparency and accountability in AI systems. As XAI advances, it continues to pave the way for more responsible, ethical, and understandable AI deployments.


By

Ms Supriya

Assistant Professor

Department of CSE (AI-ML)